Walking Noise

We are happy to announce a new contribution to ECML 2024 by Hendrik Borras*, Bernhard Klein*, and Holger Fröning.

*: These authors share first authorship, with different emphasis on methodology, experimentation, data analysis and research narrative.

Noisy Computations - An inevitable stepping stone on the path towards lowering DNN energy needs?

Deep neural networks show remarkable success in various applications.

However, they come with high computational and energy demands.

This is exacerbated by stuttering technology scaling, prompting the need for novel approaches to handle increasingly complex neural architectures.

Alternative computing technologies, e.g. analog computing, promise groundbreaking improvements in energy efficiency, but are inevitably fraught with noise and inaccurate calculations.

As with any kind of unsafe optimization, this requires countermeasures to ensure functionally correct results.

Approaches that mitigate such noise, or that allow networks to tolerate it, could thus lead to more energy-efficient and, given a fixed power budget, more time-efficient neural network training and inference.

At the same time, lowering the energy cost of training and deploying neural networks is of utmost importance, be it to enable larger models or to decrease the CO2 footprint associated with them.

These topics are thus an important point of study in our research group.

In line with this effort, our recently accepted contribution is an abstract look at the implications of such noise on neural networks.

The presented approach is applied to neural network classifiers as representative workloads with the specific goal of studying the impact of noisy computations on accuracy.

Our approach

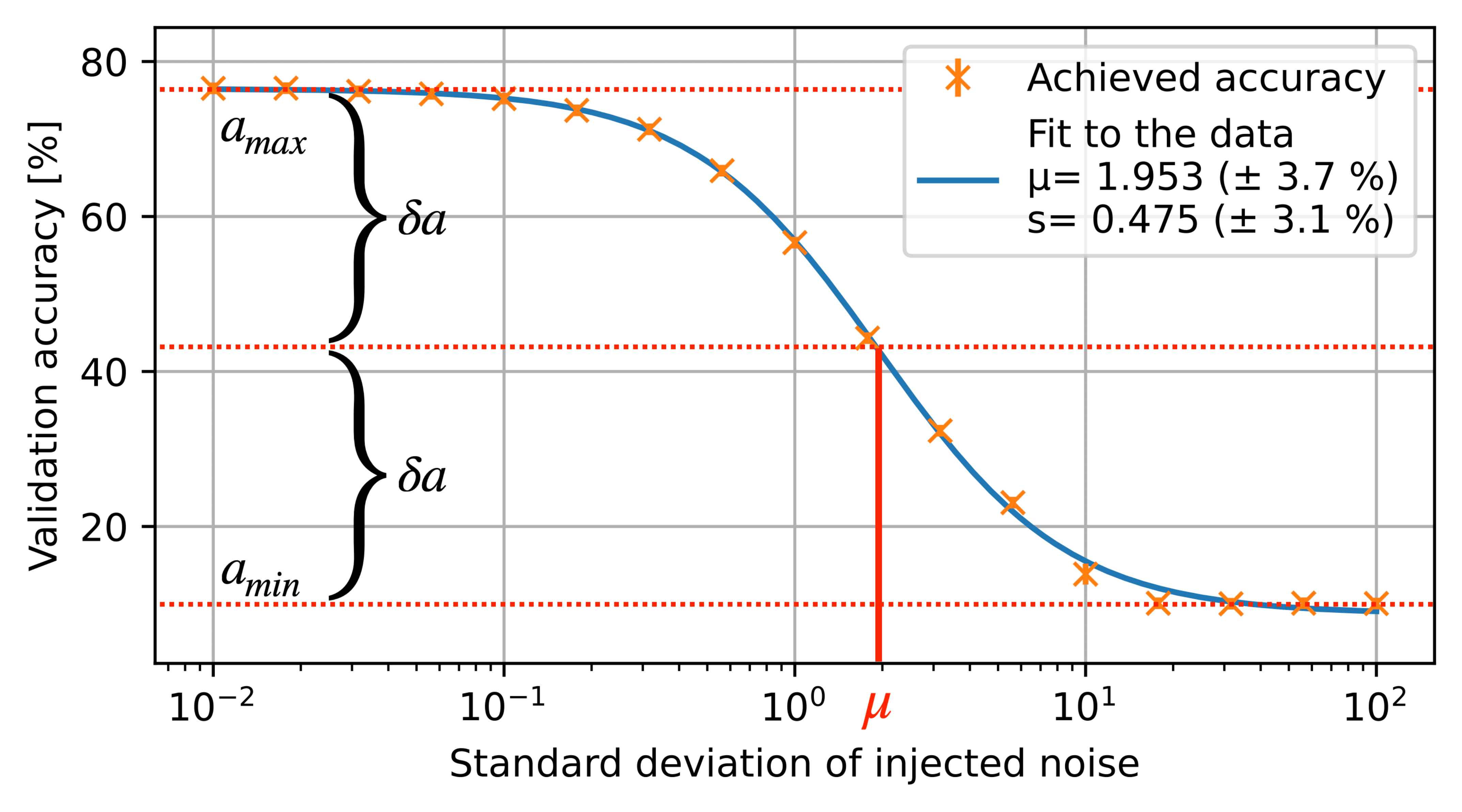

To achieve this, we introduce Walking Noise, a method of injecting layer-specific noise to measure robustness and to provide insights on learning dynamics.

More specifically, we investigate the implications of additive, multiplicative and mixed noise for different classification tasks and model architectures.
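The idea of injecting noise at one selected layer can be illustrated with a minimal sketch. This is not the implementation from the paper: the function names (`inject_noise`, `forward`), the tiny fully connected network, and the choice of Gaussian noise with mean 0 for the additive case and mean 1 for the multiplicative case are all assumptions made for illustration.

```python
import random


def inject_noise(values, kind, sigma, rng):
    """Return a noisy copy of `values` (hypothetical helper, Gaussian noise assumed)."""
    noisy = []
    for v in values:
        if kind == "additive":
            v = v + rng.gauss(0.0, sigma)
        elif kind == "multiplicative":
            v = v * rng.gauss(1.0, sigma)
        elif kind == "mixed":
            # Assumed form of mixed noise: multiplicative followed by additive.
            v = v * rng.gauss(1.0, sigma) + rng.gauss(0.0, sigma)
        else:
            raise ValueError(f"unknown noise kind: {kind}")
        noisy.append(v)
    return noisy


def dense(x, weights, bias):
    """Plain fully connected layer: one weight row and one bias per output."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def forward(x, layers, noisy_layer=None, kind="additive", sigma=0.0, rng=None):
    """Forward pass that perturbs the output of exactly one layer, if requested."""
    rng = rng or random.Random(0)
    for index, (weights, bias) in enumerate(layers):
        x = dense(x, weights, bias)
        if index == noisy_layer:
            x = inject_noise(x, kind, sigma, rng)
        if index < len(layers) - 1:  # no activation after the last layer
            x = relu(x)
    return x


# Two-layer toy network; move the noise from layer to layer.
layers = [([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
          ([[1.0, 1.0]], [0.0])]
for layer_index in range(len(layers)):
    out = forward([1.0, 2.0], layers, noisy_layer=layer_index,
                  kind="additive", sigma=0.5, rng=random.Random(42))
    print(layer_index, out)
```

Sweeping `noisy_layer` and `sigma` while recording accuracy on a real classifier would give a per-layer picture of robustness; the toy loop above only prints the perturbed output.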

While noisy training significantly increases robustness for all noise types, we observe in particular that it results in increased weight magnitudes.

This inherently improves the signal-to-noise ratio for additive noise injection.
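The signal-to-noise argument can be made concrete with a short worked example. It is a sketch under assumptions not stated in this post: a single pre-activation $z = w^\top x$, zero-mean noise $\varepsilon$ with variance $\sigma^2$ that is independent of $z$, and a hypothetical factor $c > 1$ standing in for the growth in weight magnitude.

$$
\tilde{z} = z + \varepsilon, \qquad
\mathrm{SNR}_{\text{add}} = \frac{\mathbb{E}[z^2]}{\sigma^2}, \qquad
w \to c\,w \;\Rightarrow\; \mathrm{SNR}_{\text{add}} \to \frac{c^2\,\mathbb{E}[z^2]}{\sigma^2}
$$

Under the same assumptions, multiplicative noise of the form $\tilde{z} = z\,(1 + \varepsilon)$ has noise power $\mathbb{E}[z^2]\,\sigma^2$, so $\mathrm{SNR}_{\text{mult}} = 1/\sigma^2$ regardless of $c$. In this simplified view, larger weights help against additive noise but not against multiplicative noise.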

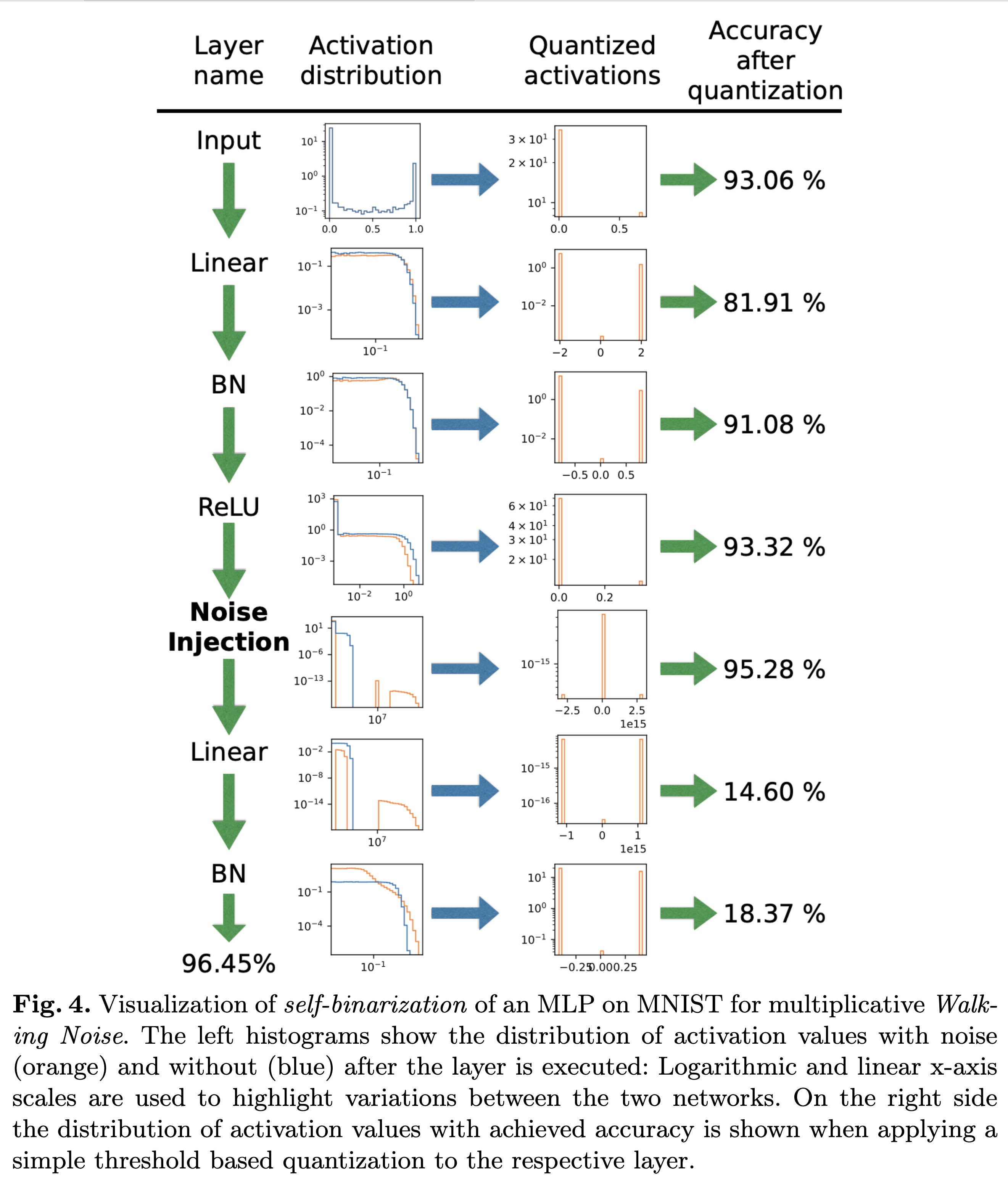

In contrast, training with multiplicative noise can lead to a form of self-binarization of the model parameters, which makes the model extremely robust.

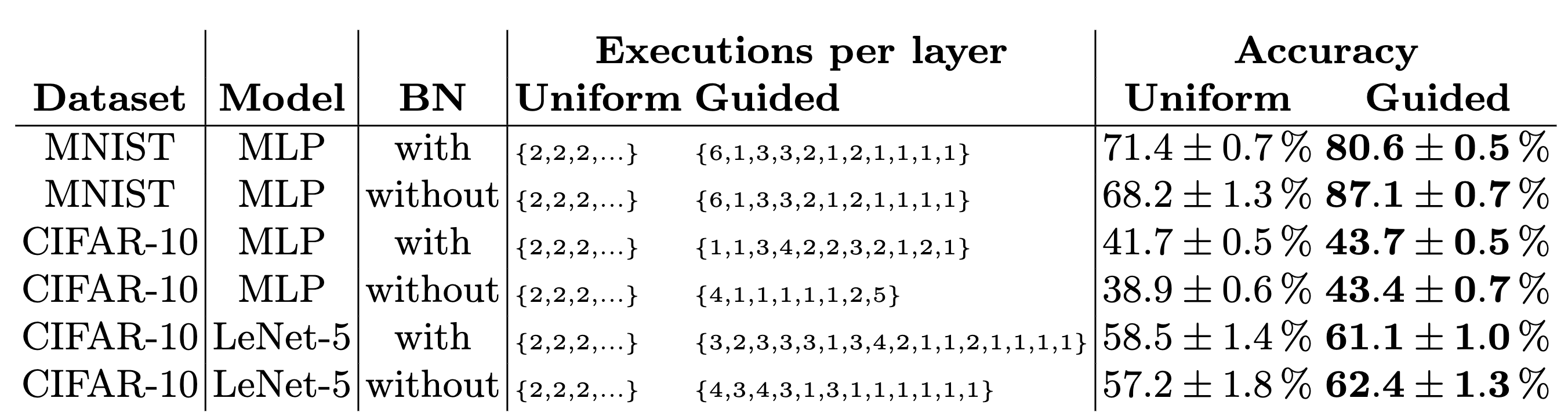

We conclude with a discussion of how this methodology can be used in practice, including its use for tailored multi-execution in noisy environments.

Major Takeaways

An important insight is the self-binarization effects we observed for certain types of noise. They are able to mitigate arbitrarily large amounts of noise while maintaining a working neural network. Shown below is an example of these effects on a sample neural network, with a specific look at the activation structure.

Our work additionally lays out methods for using Walking Noise in practical settings to effectively combat the effects of noise on neural network inference.

Distributing multiple executions across an analog accelerator in an intelligent manner, as shown below, can greatly improve prediction accuracy.
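Why repeated execution helps can be seen in a small simulation. It is a sketch, not the scheme from the paper: it assumes independent additive Gaussian noise on a single scalar result and simple averaging over repeats, and the names `noisy_execute`, `multi_execute`, and `empirical_std` are hypothetical. Under these assumptions, averaging `repeats` executions shrinks the noise standard deviation by roughly a factor of `sqrt(repeats)`.

```python
import random
import statistics


def noisy_execute(clean_value, sigma, rng):
    """One simulated noisy computation (additive Gaussian noise assumed)."""
    return clean_value + rng.gauss(0.0, sigma)


def multi_execute(clean_value, sigma, repeats, rng):
    """Average several independent noisy executions of the same computation."""
    results = [noisy_execute(clean_value, sigma, rng) for _ in range(repeats)]
    return statistics.fmean(results)


def empirical_std(repeats, sigma=1.0, trials=2000, seed=0):
    """Estimate the remaining noise level after averaging `repeats` executions."""
    rng = random.Random(seed)
    outcomes = [multi_execute(0.5, sigma, repeats, rng) for _ in range(trials)]
    return statistics.pstdev(outcomes)


for repeats in (1, 4, 16):
    print(repeats, round(empirical_std(repeats), 3))
```

Because each repeat costs additional energy and time, it is natural to spend more repeats where the network is most sensitive to noise and fewer elsewhere; a per-layer sensitivity measurement is one possible way to decide that allocation.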

Find Out More

Take a look at the paper accepted for ECML 2024: ECML 2024 accepted research papers

Or check out the arXiv pre-print: arXiv pre-print